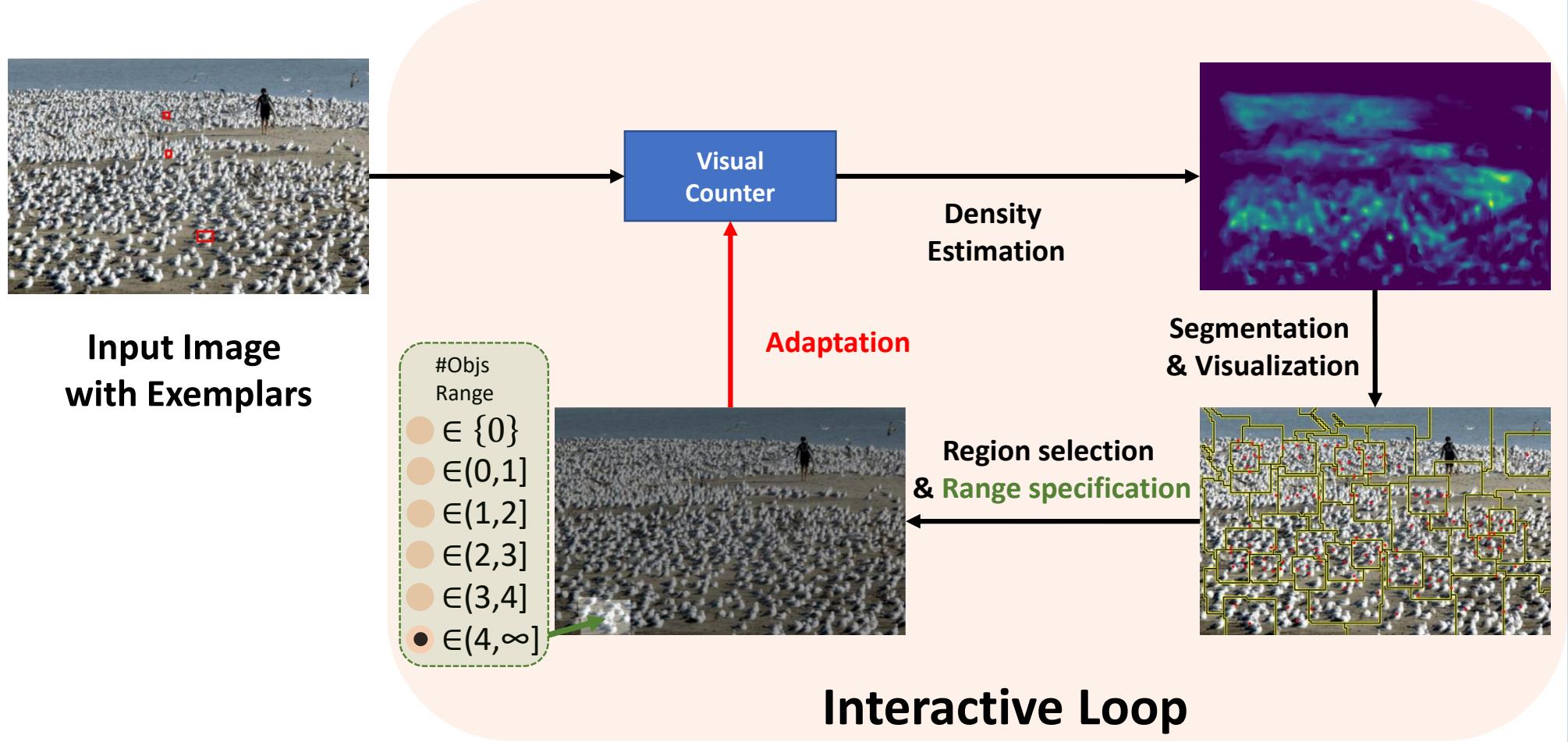

We propose a novel framework for interactive class-agnostic object counting, where a human user can interactively provide feedback to improve the accuracy of a counter. Our framework consists of two main components: a user-friendly visualizer to gather feedback and an efficient mechanism to incorporate it. In each iteration, we produce a density map to show the current prediction result, and we segment it into non-overlapping regions with an easily verifiable number of objects. The user can provide feedback by selecting a region with obvious counting errors and specifying the range for the estimated number of objects within it. To improve the counting result, we develop a novel adaptation loss to force the visual counter to output the predicted count within the user-specified range. For effective and efficient adaptation, we propose a refinement module that can be used with any density-based visual counter, and only the parameters in the refinement module will be updated during adaptation. Our experiments on two challenging class-agnostic object counting benchmarks, FSCD-LVIS and FSC-147, show that our method can reduce the mean absolute error of multiple state-of-the-art visual counters by roughly 30% to 40% with minimal user input.
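The idea of penalizing a region count only when it falls outside the user-specified range can be sketched as below. This is a minimal illustration, not the loss defined in the paper: the hinge-style form and the names `region_count` and `range_loss` are assumptions made for the example, and the density map and mask are toy nested lists.

```python
def region_count(density, mask):
    """Sum the density values over the pixels selected by a binary mask."""
    return sum(d for drow, mrow in zip(density, mask)
               for d, m in zip(drow, mrow) if m)


def range_loss(density, mask, lo, hi):
    """Assumed hinge-style penalty: zero when the region count lies in
    [lo, hi], otherwise the distance to the nearest bound."""
    c = region_count(density, mask)
    return max(0.0, lo - c) + max(0.0, c - hi)


# Toy 2x3 density map; the mask selects the four left-hand pixels.
density = [[0.5, 0.5, 0.0],
           [0.25, 0.25, 1.0]]
mask = [[1, 1, 0],
        [1, 1, 0]]

loss_inside = range_loss(density, mask, 1, 2)   # region count is 1.5, inside [1, 2]
loss_outside = range_loss(density, mask, 3, 4)  # region count is 1.5, below 3
```

Because the penalty is zero anywhere inside the range, the user only needs to give a coarse range rather than an exact count.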

We propose a practical approach to visual counting based on interactive user feedback. In each iteration: (1) the visual counter estimates the density map for the input image; (2) the density map is segmented and visualized; (3) the user selects a region and provides a range for the number of objects in the region; (4) an objective function is defined based on the provided region and count range, and the parameters of a refinement module are updated by optimizing this objective function.
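Step (4) can be illustrated with a deliberately simplified sketch. Here the refinement module is replaced by a single hypothetical scale parameter applied to the frozen counter's region count, and the objective is an assumed hinge-style range penalty; the actual refinement module and loss in the paper are more elaborate, and a real implementation would rely on an automatic differentiation library rather than the hand-written gradient used here.

```python
def adapt_scale(base_count, lo, hi, scale=1.0, lr=0.01, max_steps=1000):
    """Gradient descent on a hinge-style range penalty w.r.t. one parameter.

    base_count is the frozen counter's count inside the user-selected region.
    The refined count is scale * base_count; only `scale` is updated, which
    mirrors the idea of adapting the refinement module alone.
    """
    for _ in range(max_steps):
        count = scale * base_count
        if count < lo:
            grad = -base_count   # derivative of (lo - scale * base_count)
        elif count > hi:
            grad = base_count    # derivative of (scale * base_count - hi)
        else:
            break                # count is inside the range, so the loss is zero
        scale -= lr * grad
    return scale


# The frozen counter predicts 10 objects in the region; the user says 14 to 16.
base_count = 10.0
scale = adapt_scale(base_count, lo=14, hi=16)
refined = scale * base_count
```

The update stops as soon as the refined count enters the user's range, so the counter is moved only as far as the feedback requires.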

If you have any questions regarding our project, please feel free to contact yifehuang@cs.stonybrook.edu

@inproceedings{m_Huang-etal-ICCV23,
  author = {Yifeng Huang and Viresh Ranjan and Minh Hoai},
  title = {Interactive Class-Agnostic Object Counting},
  year = {2023},
  booktitle = {Proceedings of the International Conference on Computer Vision (ICCV)},
}